Wells Fargo had a quality oversight unit. They had compliance teams. They had internal investigations. And for over a decade, employees opened more than two million fake accounts while all of that oversight was in place.

The monitoring systems caught individual incidents. Management treated them as isolated misconduct. The sampling-based approach found problems here and there, and every time, someone decided it was a one-off. Meanwhile, the pattern was growing across thousands of employees and millions of interactions that nobody was reviewing.

When it finally blew up, it cost Wells Fargo $3 billion in fines and settlements.

People call it a fraud scandal. I call it a QA failure. The oversight existed. The sampling just wasn’t enough to see the pattern.

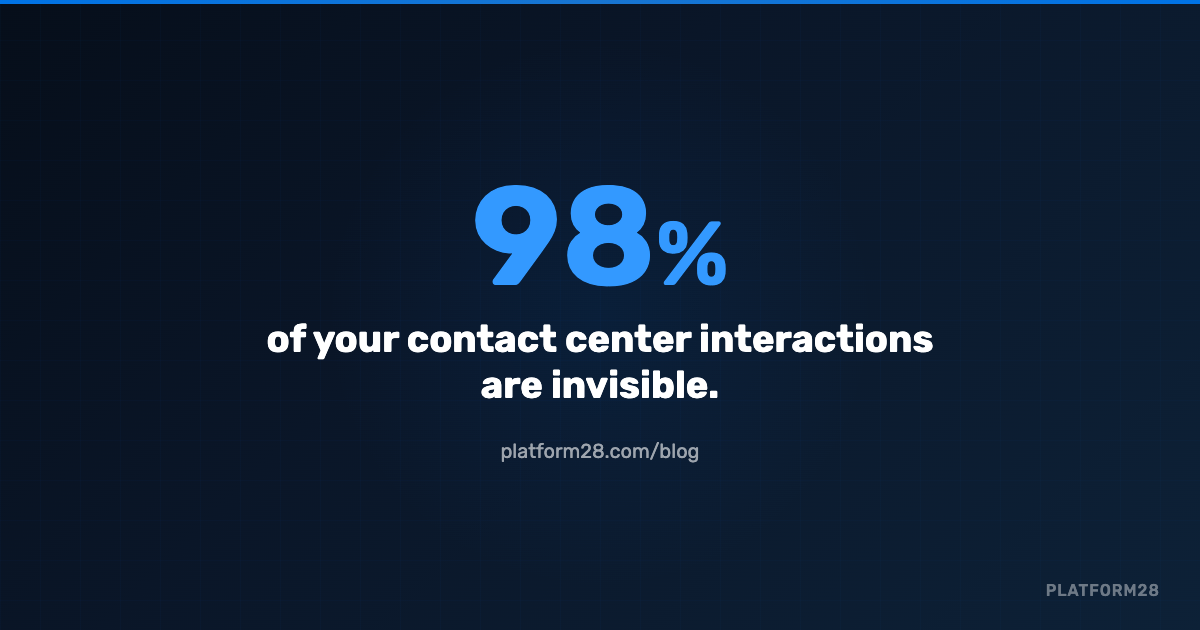

And some version of this is happening in your contact center right now. Not at Wells Fargo’s scale. But the same blind spot, the same math, the same false confidence that a small sample tells the whole story.

The Math That Nobody Talks About

Most contact centers review 2% to 5% of interactions. A QA analyst pulls a call, listens to it, scores it against a rubric, writes notes, and delivers coaching. That takes 15 to 30 minutes per interaction, so even a full-time analyst can review at most 16 to 32 interactions in an eight-hour day.

For a center handling 20,000 interactions a month, a 2% sample means 400 get reviewed. The other 19,600 disappear.
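The arithmetic is easy to check. This short Python sketch uses only the figures above (20,000 interactions a month, a 2% sample, 15 to 30 minutes per review); the function name `qa_coverage` is ours, for illustration only.

```python
# Back-of-the-envelope QA coverage for the example center in the text:
# 20,000 interactions a month, 2% sampled, 15-30 minutes per review.

def qa_coverage(monthly_interactions: int, sample_rate: float,
                minutes_per_review: float) -> dict:
    """Return reviewed/unreviewed counts and reviewer hours for one month."""
    reviewed = round(monthly_interactions * sample_rate)
    unreviewed = monthly_interactions - reviewed
    reviewer_hours = reviewed * minutes_per_review / 60
    return {"reviewed": reviewed, "unreviewed": unreviewed,
            "reviewer_hours": reviewer_hours}

for minutes in (15, 30):
    print(minutes, qa_coverage(20_000, 0.02, minutes))
```

Even that 2% sample works out to 100 to 200 analyst-hours a month, and it still leaves 19,600 interactions unheard.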

You evaluate 8 to 10 calls per month for an agent who handles thousands. Everything looks fine for three or four months. Then in month five, you catch them giving a caller completely wrong information. Or skipping identity verification. Or making promises the agency can’t keep.

Was it a one-time thing? Has it been going on for months? Do you go back and listen to hundreds of recordings to find out? How many callers already walked away with bad information?

In most organizations, the agent gets coached or fired and the issue gets swept under the rug. Nobody ever knows how much damage was done in the months nobody was listening.
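To put rough numbers on the 8-to-10-calls scenario: if a problem shows up on a fraction p of an agent’s calls and n calls are sampled at random, the chance that at least one sampled call contains it is 1 - (1 - p)^n. The sketch below treats each sampled call as an independent draw (a reasonable approximation when the sample is tiny relative to total volume). The 1% problem rate and 1,000 calls a month are hypothetical values chosen for illustration, not figures from any real center.

```python
# Chance that random sampling surfaces a recurring problem at least once.
# Assumes each reviewed call is an independent draw (a good approximation
# when the sample is tiny relative to the agent's total volume).

def p_caught(error_rate: float, samples: int) -> float:
    """Probability that at least one sampled call shows the problem."""
    return 1 - (1 - error_rate) ** samples

ERROR_RATE = 0.01        # hypothetical: problem occurs on 1% of calls
SAMPLES_PER_MONTH = 10   # upper end of the 8-10 reviews per agent in the text
CALLS_PER_MONTH = 1_000  # hypothetical agent volume

for months in range(1, 6):
    n = SAMPLES_PER_MONTH * months
    bad_calls = ERROR_RATE * CALLS_PER_MONTH * months
    print(f"month {months}: P(caught so far) = {p_caught(ERROR_RATE, n):.0%}, "
          f"expected bad calls so far = {bad_calls:.0f}")
```

Under these assumptions, the chance of having caught the problem even once is roughly 10% after one month and only about one in three after four months, by which point the agent would have handled around 40 of those calls.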

When the Caller Is a Citizen, Not a Customer

In a commercial contact center, a bad interaction costs you a customer. In a government contact center, a bad interaction can change someone’s life.

A citizen calls about Medicaid eligibility. The agent gives them wrong information. That person doesn’t apply for benefits they qualify for. They make decisions about their family based on guidance that was never accurate. The consequences aren’t measured in churn rates.

And that call was in the 98% nobody reviewed.

Government contact centers operate under strict compliance requirements — identity verification before disclosing case information, mandatory disclosures, specific protocols for different interaction types. A missed verification step isn’t just a quality issue. It’s a reportable compliance incident. But if nobody reviews the call, nobody reports it.

AI Doesn’t Sample. It Flags.

AI-powered QA doesn’t review a random 2%. It scores every interaction — every call, chat, email, and SMS — against the agency’s specific criteria. On every single transcript, the AI evaluates:

- Did the agent verify the caller’s identity before disclosing case information?

- Was the information provided accurate and consistent with current policy?

- Were required disclosures and disclaimers delivered?

- Did the agent make promises the agency can’t fulfill?

- Is there a safety concern that requires immediate attention?

Every transcript. Same rubric. No scorer-to-scorer variation. No blind spots.
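One way to picture “same rubric, every transcript” is as a fixed set of flags applied to each interaction and then rolled up per agent across the full volume rather than a sample. This Python sketch assumes the per-transcript flags have already been produced by whatever evaluator the platform uses (the AI step itself is not shown). The criterion keys and the `flag_rates` function are hypothetical names that mirror the five questions above.

```python
from collections import Counter, defaultdict
from typing import Dict, Iterable, Tuple

# The five questions from the list above, as one fixed rubric.
# A flag set to True means the issue WAS found in that transcript.
RUBRIC = (
    "disclosed_before_verification",
    "inaccurate_information",
    "missing_required_disclosure",
    "unfulfillable_promise",
    "safety_concern",
)

def flag_rates(results: Iterable[Tuple[str, Dict[str, bool]]]) -> Dict[str, Dict[str, float]]:
    """Per-agent share of interactions carrying each flag.

    `results` holds one (agent_id, flags) pair for every scored transcript;
    the flags themselves are assumed to come from an upstream evaluator.
    """
    totals: Counter = Counter()
    hits: Dict[str, Counter] = defaultdict(Counter)
    for agent, flags in results:
        totals[agent] += 1
        for criterion in RUBRIC:
            if flags.get(criterion, False):
                hits[agent][criterion] += 1
    return {
        agent: {c: hits[agent][c] / n for c in RUBRIC}
        for agent, n in totals.items()
    }

# Hypothetical scored transcripts: two agents, four interactions.
scored = [
    ("agent_a", {"disclosed_before_verification": True}),
    ("agent_a", {}),
    ("agent_b", {"missing_required_disclosure": True}),
    ("agent_a", {}),
]
print(flag_rates(scored)["agent_a"]["disclosed_before_verification"])
```

Because the denominator is every interaction an agent handled, a habit that appears on 1 call in 100 surfaces as a measurable rate instead of depending on whether one of 8 to 10 sampled calls happened to catch it.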

But scoring every interaction isn’t even the most important part. The real shift is real-time alerting.

Agent discloses case information before verifying the caller’s identity? Flagged immediately. Agent gives eligibility guidance that contradicts current policy? A supervisor gets an alert during the call — not in a random review three weeks later. Caller sentiment drops or someone mentions harming themselves? Surfaced in real time so someone can intervene.
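The first rule above, flagging disclosure before verification, can be expressed as a streaming check. The sketch below is a rule-based stand-in, not an AI model: it assumes a hypothetical upstream classifier has already tagged each utterance with a label such as `identity_verified` or `case_disclosure`, and it only shows how an alert can fire on the turn where the violation happens rather than after the call ends. The names `Utterance`, `Alert`, and `monitor` are illustrative.

```python
from dataclasses import dataclass
from typing import Iterable, Iterator, Optional

@dataclass
class Utterance:
    speaker: str                 # "agent" or "caller"
    text: str
    label: Optional[str] = None  # hypothetical upstream tag

@dataclass
class Alert:
    index: int                   # turn number where the violation occurred
    rule: str
    text: str

def monitor(stream: Iterable[Utterance]) -> Iterator[Alert]:
    """Yield an alert the moment an agent discloses case info before verification."""
    verified = False
    for i, u in enumerate(stream):
        if u.label == "identity_verified":
            verified = True
        elif u.label == "case_disclosure" and u.speaker == "agent" and not verified:
            yield Alert(i, "disclosure_before_verification", u.text)

# Hypothetical call: the agent discloses status before verifying identity.
call = [
    Utterance("caller", "Hi, I'm calling about my benefits case."),
    Utterance("agent", "I see your application is still pending.", "case_disclosure"),
    Utterance("agent", "Thanks, I've confirmed your identity.", "identity_verified"),
    Utterance("agent", "Your next review date is on file.", "case_disclosure"),
]

for alert in monitor(call):
    print(f"ALERT at turn {alert.index}: {alert.rule}: {alert.text!r}")
```

Because `monitor` is a generator, each alert is produced as soon as the offending utterance is consumed, which is what lets a supervisor see it while the caller is still on the line. In a real deployment the hard part is the labeling step, which this sketch deliberately leaves out.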

One insurance claims center that previously monitored less than 3% of calls started catching agents providing incorrect information in real time. Supervisors sent corrections via chat while the call was still happening. Contact centers using real-time AI monitoring are seeing 40% fewer escalations and 35% fewer complaints reaching regulatory bodies.

Your QA team doesn’t disappear. They stop spending their days listening to recordings and start focusing on the patterns and coaching opportunities the AI surfaces for them. The volume gets handled automatically. The judgment stays human.

What We’ve Seen

A state agency handling child protective services intake was processing over 20,000 interactions per month. Their QA team reviewed about 400 — the standard 2%.

After moving to AI-powered quality management, every interaction was scored. Case handling time dropped 70%. Agents received targeted coaching based on complete performance data instead of a random handful of recordings. The QA team’s job got better, not smaller — they stopped listening to calls all day and started improving the operation.

The Bottom Line

If your QA program reviews 2% of interactions, you don’t have a quality program. You have a spot-check.

The industry accepted 2% as normal because it was the best anyone could do with human reviewers. That limitation doesn’t exist anymore.